Scanner

The scanner lab orchestrates repeatable HTTP checks. Jobs fetch upstream resources, capture full responses, and surface deltas in the dashboard.

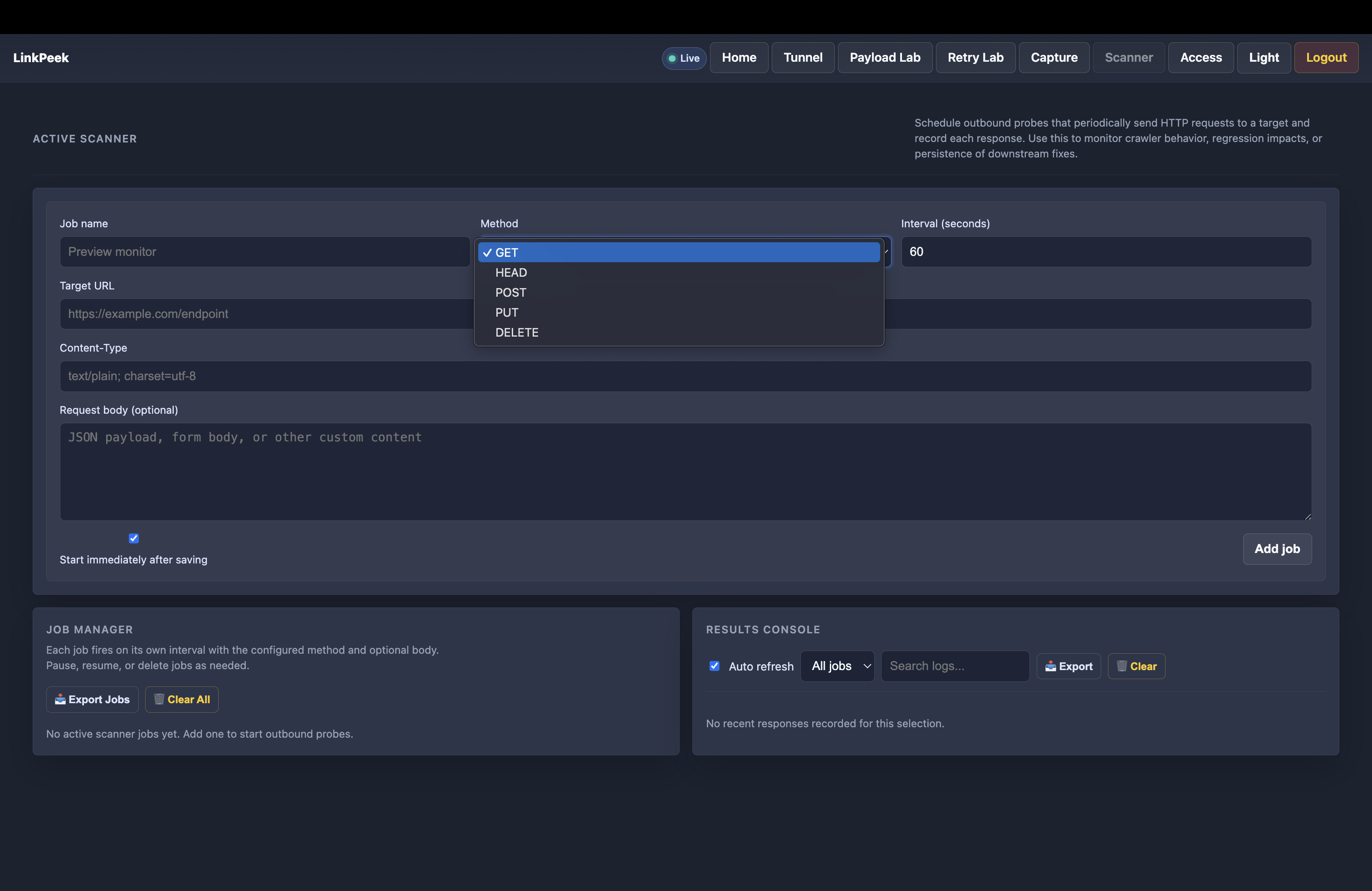

Create a job

- Navigate to Scanner → New job.

- Enter the target URL and optional body payload.

- Configure headers, user agent, and authentication as needed.

- Set the interval in seconds. Leave the “Start immediately after saving” box checked for an instant run, or clear it to stage the job before turning it on.

Jobs execute once right after creation when the start toggle is enabled, then repeat on the configured interval.
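The timing described above can be sketched in a few lines of Python. This is only an illustration, not the scanner's actual scheduler: the function name `planned_runs` is hypothetical, and it assumes the interval is counted from the start of the previous run with no drift or jitter.

```python
from datetime import datetime, timedelta, timezone
from itertools import islice
from typing import Iterator


def planned_runs(created_at: datetime, interval_seconds: int) -> Iterator[datetime]:
    """Yield expected run times for a job saved with the start toggle enabled.

    Assumption: the first run fires at creation time and each later run
    follows exactly one interval after the previous one.
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    step = timedelta(seconds=interval_seconds)
    next_run = created_at  # the initial run happens right after creation
    while True:
        yield next_run
        next_run += step


# Example: a job created at noon UTC with a 300-second interval.
created = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
first_three = list(islice(planned_runs(created, 300), 3))
for moment in first_three:
    print(moment.isoformat())
```

A staged job (start box cleared) is not modelled here, because its first run depends on when you turn it on.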

After scheduling a job, review the Inspect results section to confirm that deltas and response metadata update with each run.

Inspect results

- Each run records the HTTP status, duration, and response size alongside a timestamped snippet.

- Filter the feed by job or search within URLs, snippets, and error text to focus on specific activity.

- Use the toolbar downloads (📥 NDJSON, 📥 JSON, 📄 PDF) for archival exports.
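The same job and text filters can be applied offline to an NDJSON export. The sketch below is a rough illustration only: the field names (`job_id`, `url`, `snippet`, `error`, `status`) are assumptions, since the export schema is not documented here, so adjust them to match the keys in your own download.

```python
import json
from typing import Any, Dict, Iterable, List, Optional


def parse_ndjson(text: str) -> List[Dict[str, Any]]:
    """Parse newline-delimited JSON, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def filter_runs(runs: Iterable[Dict[str, Any]],
                job_id: Optional[str] = None,
                query: Optional[str] = None) -> List[Dict[str, Any]]:
    """Keep runs matching a job and/or a case-insensitive text search.

    The search covers the (assumed) url, snippet, and error fields.
    """
    needle = query.lower() if query else None
    matched = []
    for run in runs:
        if job_id is not None and run.get("job_id") != job_id:
            continue
        if needle is not None:
            haystack = " ".join(
                str(run.get(key) or "") for key in ("url", "snippet", "error")
            ).lower()
            if needle not in haystack:
                continue
        matched.append(run)
    return matched


# Made-up sample data standing in for a real export file.
sample = "\n".join([
    json.dumps({"job_id": "a", "url": "https://example.com/health",
                "status": 200, "snippet": "ok", "error": None}),
    json.dumps({"job_id": "b", "url": "https://example.com/login",
                "status": 500, "snippet": "", "error": "upstream timeout"}),
])
runs = parse_ndjson(sample)
timeouts = filter_runs(runs, query="timeout")
print([run["job_id"] for run in timeouts])
```

For a real export, read the file contents into a string and pass that to `parse_ndjson` in place of `sample`.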

Manual controls

- Export jobs: Download the current definitions as JSON for backups or migrations.

- Clear all jobs / logs: Remove definitions or historical runs when you want a clean slate (confirmation required).

- Per-job delete buttons: Remove individual jobs directly from each card.

- Auto refresh toggle: Pause background polling while investigating older results.

Automation hooks

- Subscribe to the scanner.jobs topic for definition updates and scanner.results for the rolling run feed.

- Poll GET /api/scanner/jobs for metadata or GET /api/scanner/results?n=100 for recent executions.
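A minimal polling sketch using only the Python standard library might look like the following. The two endpoint paths and the `n` parameter come from the list above; everything else is an assumption, including the base URL, the helper names, the absence of authentication, and the expectation that both endpoints return JSON bodies.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Tuple

# Assumption: replace with the address of your own deployment.
BASE_URL = "http://localhost:8080"


def jobs_url(base_url: str) -> str:
    """Build the URL for job metadata."""
    return f"{base_url.rstrip('/')}/api/scanner/jobs"


def results_url(base_url: str, n: int = 100) -> str:
    """Build the URL for the n most recent executions."""
    query = urllib.parse.urlencode({"n": n})
    return f"{base_url.rstrip('/')}/api/scanner/results?{query}"


def fetch_json(url: str, timeout: float = 10.0) -> Any:
    """GET a URL and decode the body as JSON (assumed response format)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def poll_once(base_url: str = BASE_URL, n: int = 100) -> Tuple[Any, Any]:
    """Fetch job metadata and recent results in one pass."""
    return fetch_json(jobs_url(base_url)), fetch_json(results_url(base_url, n))


print(results_url(BASE_URL))
```

Calling `poll_once()` from a cron job or a loop with a sleep would give a simple poller. If your deployment requires credentials, build a `urllib.request.Request` with the appropriate headers instead of passing a bare URL.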

Jobs persist in Postgres. Back up the database regularly if you use scanner history for compliance or long-term monitoring.